In a post first published on The Scholarly Kitchen blog, Nina Di Cara and Claire Haworth discuss the Data Hazards project and how it is being applied to improve how data ethics are identified and communicated in mental health research.

Predicting mental health from Twitter

We create a huge amount of data about ourselves every day by interacting with technology. We might use social media to express how we are feeling, use particular apps when we feel a certain way, or regularly buy the same items. Taken together, this data is a powerful reflection of our habits, our activities, and sometimes our health.

Most of us are familiar with this information being used for targeted advertising on the internet, but digital information is increasingly being used by researchers to help better understand and prevent health conditions.

Our research focuses on how our tweets and other social media posts can indicate changes in our mental health over time, because what we choose to say, and how we say it, can reflect our emotional state. To study this, we use Twitter data that participants in the Avon Longitudinal Study of Parents and Children (ALSPAC, known to participants as Children of the 90s) birth cohort have kindly allowed us to access. ALSPAC is a longitudinal study of individuals born in and around Bristol, UK, in the early 1990s, and of their families.

This gives us a great opportunity to understand how people's digital data matches how they are really feeling, rather than assuming that someone must be sad just because they use sad words. We can do this because ALSPAC participants have been completing mental health and well-being questionnaires for many years, and their answers can be matched with their Twitter data.

Research like this can have many potential applications, like being able to see how groups of people are responding to difficult events or helping people to track their own mental health over time.

But, as with all new technologies, we also have to be mindful of how these technologies could be used in the wider world, sometimes in ways we don’t expect. How do we decide what the potential risks are, and should we stop doing the research completely if there are risks, even if it has the potential to help?

The process of doing responsible research can be complex, and for data science research we are still coming across problems in applying traditional ethical values to research that uses machine learning and artificial intelligence. That’s where the Data Hazards project comes in…

Data Hazards

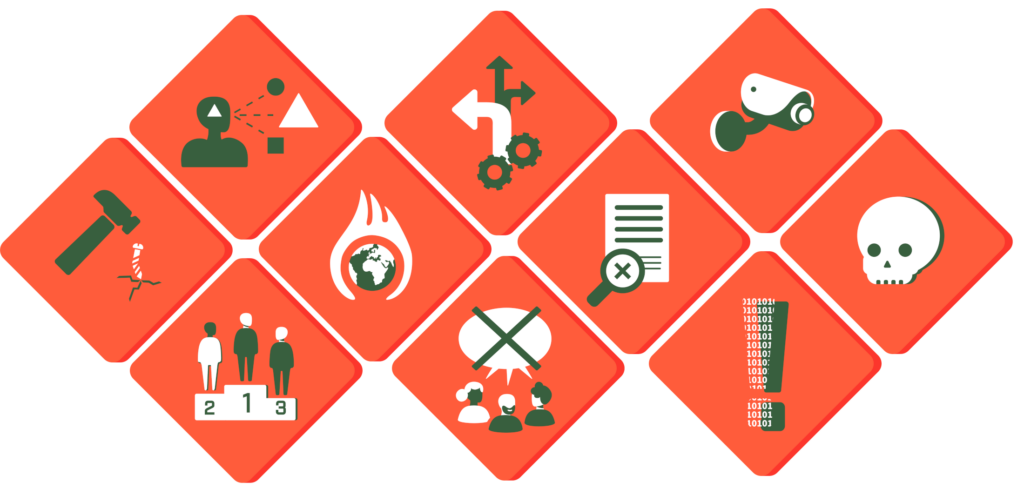

The Data Hazards project is about identifying the ethical risks of data science. It asks us to think about data science risks in a similar way to chemical ones – we need to be aware of what the risks are so that we can figure out how to make things safer and avoid people getting hurt.

The project was started by Nina Di Cara and Natalie Zelenka in 2021, inspired by conversations about recurring ethical hazards that came up at the Data Ethics Club discussion group, and a worry that data scientists did not have enough opportunities to learn about ethics.

So, how does it work?

We all know that bleach can be dangerous, but that doesn’t stop us from using it in our homes in a sensible way! Other chemicals are much more dangerous and can only be used in a controlled way, by those who know how to manage their risks and have the equipment and training to do so. Why not have similar considerations for data-based tools?

There are currently eleven Data Hazards, each representing a different risk, with examples and potential safety mitigations for each. The Hazards themselves are part of an open-source project and have been developed over the past two years through a series of workshops with interdisciplinary researchers. In the future we may add more Hazards based on feedback and in response to changing ethical issues.

The tricky bit is that, when we think about our own projects, potential risks are not always obvious at first. Because of our life experiences, we might not see ethical concerns in the way that other people do, and we may not experience negative outcomes in the same way either. This is why it is important to talk to a range of people with different kinds of experience to get a full picture of how using data in different ways could affect someone's life.

The ethics of mental health inference from social media

To explore the ethical risks of our research on tracking mental health through social media, and how they might be mitigated, we asked participants in the workshops we have run over the past 18 months what they thought of our project. This gave us a wide range of views on the ethics of predicting mental health from social media, from people in different disciplines and with different relationships to the research, and it helped us develop our strategies for mitigating risk.

The Data Hazards that people thought applied to our project included Privacy, Reinforces Existing Bias, Ranks or Classifies People, and Danger of Misuse. We explain why each one applies below.

While these Hazards cover several of the risks of using Twitter in mental health research, at the time of the workshops Twitter had not yet started charging fees for access to its data. This is a new and evolving risk that we may need to take into account, and it shows how important it is that our ethical considerations keep pace with rapid changes in technology.

PRIVACY

There is no risk to privacy for the participants in the study, since their data is protected by the central cohort study team and they have consented to it being used in this way. However, if we published and shared our predictive models, there could be a risk to privacy if someone used them maliciously to predict an individual's mental health.

One way to mitigate this would be to share our exact models for predicting mental health only with verified researchers who have been granted ethical approval for their studies.

REINFORCES EXISTING BIAS

This Hazard applies because the models will learn patterns from the data we give them about participants' mental health. The ALSPAC cohort sample was designed to reflect the population of Bristol (a city in the southwest of the UK) in 1991/2, and it does not include many people from ethnic minority backgrounds or from outside Bristol. As a result, there is less training data for people who are not White or who come from other parts of the world. Even within the UK, the way people use language may differ, and this could inadvertently introduce bias.

Bias is something we can test for, so we know we need to test our models to check that they behave fairly before they are used in practice. We would also love to test our models on other datasets from around the world to see whether we get different results.
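To make "testing for bias" a little more concrete, here is a minimal sketch of one simple check: comparing a model's average prediction error across subgroups. It is not the method used in our project. It assumes the model outputs a continuous score, and the function names (`error_by_group`, `max_error_gap`, `flag_unfair`), the toy scores, the group labels and the tolerance value are all hypothetical, made up purely for illustration.

```python
from collections import defaultdict
from statistics import mean


def error_by_group(y_true, y_pred, groups):
    """Mean absolute error of continuous-score predictions, per subgroup (hypothetical helper)."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must be the same length")
    errors = defaultdict(list)
    for truth, pred, group in zip(y_true, y_pred, groups):
        errors[group].append(abs(truth - pred))
    return {group: mean(errs) for group, errs in errors.items()}


def max_error_gap(group_errors):
    """Largest difference in mean absolute error between any two subgroups."""
    if not group_errors:
        raise ValueError("need at least one subgroup")
    return max(group_errors.values()) - min(group_errors.values())


def flag_unfair(group_errors, tolerance):
    """True if the error gap exceeds a tolerance; the tolerance is an assumed, user-chosen value."""
    return max_error_gap(group_errors) > tolerance


if __name__ == "__main__":
    # Made-up questionnaire scores, model predictions and group labels,
    # for illustration only; these are not ALSPAC data.
    scores = [10, 12, 8, 15, 9, 11]
    predicted = [11, 12, 8, 10, 12, 11]
    group_labels = ["A", "A", "A", "B", "B", "B"]
    per_group = error_by_group(scores, predicted, group_labels)
    print(per_group)
    print("gap:", max_error_gap(per_group))
    print("flag:", flag_unfair(per_group, tolerance=1.0))
```

In this toy example the model is much less accurate for group B than for group A, so the check flags it. In practice, deciding which groups to compare and how large a gap counts as unfair are judgment calls, and a single error metric is only one of many possible fairness checks.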

RANKS OR CLASSIFIES PEOPLE

The models made as part of this project could be used to rank or classify individual mental health states. Ranking and classification have a problematic history, and the definitions of categories can be heavily influenced by cultural and societal expectations.

To mitigate this, we have chosen to focus on predicting a continuous score on a scale, rather than whether someone has a particular mental illness.

DANGER OF MISUSE

Inferring mental health states from public social media data could be misused by anyone able to apply the models to people who were not in the original study. Those people will not have consented to this sensitive information being inferred and shared about them, which could be problematic whether or not the models are accurate.

One way to mitigate this is, once again, to treat models as sensitive research information and share them only with verified researchers. Another mitigation might be to make sure models rely on some information that only the user can provide, so that they would not work unless the individual had consented to their use.

The risks of not doing

We have talked through the potential pitfalls of doing this type of research, but it is also important to consider the risks of not doing it. Who might lose out if we do not work on this problem?

Deciding whether all research should be permitted is a tricky balance, especially in the context of today's debates about academic freedom. This is certainly the role of research ethics committees, although they are not always well prepared to make these decisions about data science research.

In this case, our approach to the problem is to provide high-quality data and evidence so that we can get an accurate idea of how good these technologies can be. So far, a lot of research in this field has used poor-quality data to make some big claims. Using the data we have worked hard to collect from a reliable source, we would like to show when and why these methods for predicting mental health with social media do or don’t work.

We hope by doing this we will be reducing harm, setting realistic expectations about what technology can achieve, and improving the digital future for us all!

What’s next?

The Data Hazards project was developed to help researchers better identify and communicate the ethical risks that come from their data science work. As we've shown here, there can be many different risks to consider, and we would love for this to be an example of how risks can be communicated in a sensible, easy-to-understand way.

The Data Hazards project is always inviting new perspectives and feedback, and all our resources are openly licensed for others to use (CC BY 4.0), so if you are interested in finding out more or suggesting new and improved labels then please get in touch via our website!

We hope that if more of us speak about data science risks early in our research processes, and plan for them throughout our work, it will make it much easier to work together to prevent negative outcomes for all of us.